Continued from Part 1, this blog post continues with what I learnt from the Coursera course on Generative AI.

LICENSE: Images shown in this blog post and following parts of the blog are screenshots taken from the Generative AI course. You may not use or distribute these or the text content for commercial purposes. It was created by DeepLearning.ai, and is licensed under a creative commons license.

Here are some of the main points:

Instruction fine-tuning

Limitation of in-context learning:

- Poor results for smaller LLMs, even when 5 or 6 exemplars are given.

- Multiple examples take up more space in the context window.

So, fine-tuning is done to further train the model to improve performance.

Pre-training is self-supervised learning, where an LLM is trained using vast unstructured text data.

Fine-tuning, is supervised learning, where labeled task-specific examples (prompt-completion pairs) update the LLM's weights. It improves the model's ability to provide good completions for a specific task, often requiring just 500 to 1000 examples.

Instruction fine-tuning is just one of the ways to do it.

So if you are fine-tuning the model to perform summarizations, you'd use exemplars like:

Summarize the following text:[EXAMPLE TEXT][EXAMPLE COMPLETION]

If you were fine-tuning for translation tasks:

Translate this sentence to:[EXAMPLE TEXT][EXAMPLE COMPLETION]

Full fine tuning

All the model weights are updated. It needs enough memory and compute to store and process all the gradients, optimizers, etc.

There are many curated libraries of such prompt templates. One example is shown here.

Fine tuning steps:

- Select prompts from your training data set and pass them to the LLM, which generates completions.

- Compare LLM completion with the response specified in the training data. The LLM output is a probability distribution across tokens. So you can compare the distribution of the completion and that of the training label and use a standard crossentropy function to calculate loss between the two token distributions.

- Then use the calculated loss to update your model weights in standard backpropagation. This is done for many batches of prompt completion pairs and over several epochs.

- Update the weights so that the model's performance on the task improves.

- Define separate evaluation steps to measure LLM performance using the holdout validation data set. This will give the validation accuracy.

- After completing fine tuning, perform a final performance evaluation using the holdout test data set.

- This will give the test accuracy.

Fine-tuning results in a new version of the base model, often called an instruct model that is better at the tasks you are interested in.

Avoiding Catastrophic Forgetting

Catastrophic forgetting happens because the full fine-tuning process modifies the weights of the original LLM. It leads to great performance on the single fine-tuning task, but degrades performance on other tasks.

1. Decide if catastrophic forgetting actually impacts your use case. If all you need is reliable performance on the single task you fine-tuned on, it may not be an issue that the model can't generalize to other tasks. If you need the model to maintain its multitask generalized capabilities, you can perform fine-tuning on multiple tasks at one time. Good multitask fine-tuning may require 50-100,000 examples across many tasks.

2. Perform Parameter Efficient Fine-Tuning (PEFT) instead of full fine-tuning. PEFT is a set of techniques that preserves the weights of the original LLM and trains only a small number of task-specific adapter layers and parameters.

Multi task instruction fine tuning

Fine tuned LAnguage Net (FLAN) is a specific set of instructions used to perform instruction fine tuning. When the T5 model is fine tuned with FLAN, it's called FLAN-T5. Similarly, there's FLAN-PALM. The image below shows how many datasets FLAN-T5 was trained with for multi-instruction.

It's important to use datasets that are closer in similarity to the real-world application of the model. This can often involve an additional iteration of fine-tuning with human-curated examples from actual examples of where the model was used and where it was seen that the model performed poorly.

Model evaluation metrics

In traditional Neural Networks, you can use accuracy=correct predictions/total predictions, because the models are deterministic. In LLMs, the non-deterministic output and language-based evaluation is difficult.

- Recall-Oriented Understudy for Gisting Evaluation (ROUGE) scoring: Assesses the quality of automatically generated summaries by comparing them to human-generated reference summaries.

- BiLingual Evaluation Understudy (BLEU): Evaluates the quality of machine-translated text, by comparing it to human-generated translations.

|

| "gram" just means "word" |

With bigrams, you work with pairs of words, which indicate in a small way, the ordering of the sentence. Scores would be even lower with larger sentences, thereby better capturing the difference in sentences.

Rather than expand this concept with n-grams, we use the Longest Common Subsequence (LCS). The three scores (recall, precision, f1) together are the ROUGE-L score. The ROUGE score of a particular task (like summarization) cannot be used to compare with the ROUGE score of another task (like translation).

ROUGE clipping is used when there are repetitive words. So only one of the repeating words is considered. There is of course an issue if all the words of a sentence are re-ordered.

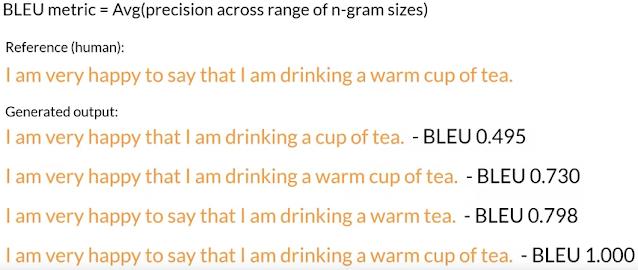

BLEU score is calculated a bit differently, since it's for translation. We average the precision across a range of different n-gram sizes. If you were to calculate this by hand, you would carry out multiple calculations and then average all of the results to find the BLEU score. As we get closer and closer to the original sentence, we get a score that is closer and closer to 1.

You can use them for simple reference as you iterate over your models, but you shouldn't use them alone to report the final evaluation of a large language model. Use rouge for diagnostic evaluation of

summarization tasks and BLEU for translation tasks. For overall evaluation of your model's performance, however, you will need to look at one of the evaluation benchmarks like GLUE, SuperGLUE, HELM, MMLU (Massive Multitask Language Understanding), BIG-bench, etc.

Benchmarks

Simple evaluation metrics like ROUGE and BLEU scores can only tell you very little about the capabilities of your model. To measure and compare LLMs holistically, you can make use of pre-existing datasets and associated benchmarks established by LLM researchers specifically for this purpose.

Selecting the right evaluation dataset is vital, to accurately assess LLM performance and understand its true capabilities. It's useful to select datasets that isolate specific model skills, like reasoning or common sense knowledge, and those that focus on potential risks, such as disinformation or copyright infringement.

An important issue that you should consider: whether the model has seen your evaluation data during training. You'll get a more accurate and useful sense of the model's capabilities by evaluating its performance on data that it hasn't seen before.

- General Language Understanding Evaluation (GLUE): Introduced in 2018. It's a collection of natural language tasks, such as sentiment analysis and question-answering, created to encourage the development of models that can generalize across multiple tasks, You can use it to measure and compare model performance.

- SuperGLUE is a successor to GLUE, created in 2019, to address GLUE's limitations. It consists of a series of tasks, some of which are not included in GLUE, and some of which are more challenging versions of the same tasks. Tasks like multi-sentence reasoning, and reading comprehension.

As models get larger, their performance against benchmarks such as SuperGLUE start to match human ability on specific tasks, but subjectively we can see that they're not performing at human level at tasks in general.

- Massive Multitask Language Understanding (MMLU): Released in 2021, it's designed specifically for modern LLMs. To perform well models must possess extensive world knowledge and problem-solving ability. Models are tested on elementary mathematics, US history, computer science, law, and more.

- BIG-bench: Introduced in 2022, currently consists of 204 tasks, ranging through linguistics, childhood development, math, common sense reasoning, biology, physics, social bias, software development and more. BIG-bench comes in three different sizes, to keep costs achievable, as running these large benchmarks can incur large inference costs.

- Holistic Evaluation of Language Models (HELM): It aims to improve the transparency of models, and to offer guidance on which models perform well for specific tasks. HELM takes a multi-metric approach, measuring seven metrics across 16 core scenarios, ensuring that trade-offs between models and metrics are clearly exposed. One important feature of HELM is that it assesses on metrics beyond basic accuracy measures, like precision of the F1 score. The benchmark also includes metrics for fairness, bias, and toxicity, which are becoming increasingly important to assess as LLMs become more capable of human-like language generation, and in turn of exhibiting potentially harmful behavior. HELM is a living benchmark that aims to continuously evolve with the addition of new scenarios, metrics, and models.

Parameter efficient fine-tuning (PEFT)

Full fine-tuning requires memory not just to store the model, optimizer states, gradients, forward activations, and temporary memory throughout the training process. PEFT only updates a small subset of parameters. Some PEFT techniques freeze most of the model weights and focus on fine tuning a subset of existing model parameters, for example, particular layers or components. Other techniques don't touch the original model weights at all, and instead add a small number of new parameters or layers and fine-tune only the new components. With PEFT, most if not all of the LLM weights are kept frozen. As a result, the number of trained parameters is sometimes just 15-20% of the original LLM weights. So PEFT can often be performed on a single GPU. And because the original LLM is only slightly modified or left unchanged, PEFT is less prone to the catastrophic forgetting problems of full fine-tuning. The new parameters are combined with the original LLM weights for inference. PEFT weights are trained for each task and can be easily swapped out for inference, allowing efficient adaptation of the original model to multiple tasks.

|

| New parameters augmented with the original LLM |

PEFT tradeoffs

There are several methods you can use for parameter efficient fine-tuning, each with trade-offs on:

- Parameter efficiency

- Memory efficiency

- Model performance

- Inference costs

- Training speed

PEFT methods

- Selective methods: They fine-tune only a subset of the original LLM parameters. You can train only certain components of the model or specific layers, or even individual parameter types.

- Reparameterization: These work with the original LLM parameters, but reduce the number of parameters to train by creating new low rank transformations of the original network weights. A commonly used technique of this type is LoRA.

- Additive methods: These carry out fine-tuning by keeping all of the original LLM weights frozen and introducing new trainable components. There are two main approaches: Adapter methods add new trainable layers to the architecture of the model, typically inside the encoder or decoder components after the attention or feed-forward layers. Soft prompt methods, keep the model architecture fixed and frozen, and focus on manipulating the input to achieve better performance. This can be done by adding trainable parameters to the prompt embeddings or keeping the input fixed and retraining the embedding weights.

PEFT: Low Rank Adaptation (LoRA) of LLMs

It's a PEFT technique in the re-parametrization category. A transformer typically has weights of dimension 512 * 64 = 32768. But with LoRA of rank = 8, matrix A has dimensions 8 * 64 = 512, and matrix B has dimensions 512 * 8 = 4096. That's 4096 + 512 = 4608 parameters, which is an 86% reduction in parameters to train, and can be done with a single GPU.

To train for a particular task, you'd train the LoRA matrices A and B, and then multiply them and add them to the original frozen weights (depicted by the snowflake). The summed matrix replaces the original weights of the model. In the same way, you can train for a different task and do similar replacement.

Deciding the best rank for LoRA matrices:

|

| LoRA rank, resulting loss values and various metrics |

Researchers observed that the validation loss plateaued after rank sizes of 16. The numbers in bold show the best values obtained for each metric and validation loss. So you could choose a rank ranging from 4 to 16, but beyond 16, there's no benefit. Training all the weights of the model does give better results than LoRA, but given the flexibility and lesser compute of LoRA, the slight loss of performance is forgivable.

PEFT: Prompt Tuning with Soft Prompts

Prompt tuning is not prompt engineering. The problem with prompt engineering is that it can require a lot of manual effort to write and try different prompts. You're also limited by the length of the context window, and at the end of the day, you may still not achieve the desired performance.

|

| Pre-pended soft prompts shown in yellow |

With prompt tuning, you add additional trainable tokens to your prompt and leave it up to the supervised learning process to determine their optimal values. The set of trainable tokens is called a soft prompt, and it gets pre-pended to embedding vectors that represent your input text. Approximately 20 and 100 virtual tokens can be sufficient for good performance. Soft prompts can easily be switched with a different set of trained soft prompts, for a different task.

1. Embedding space: Tokens typically are in a fixed location in the embedding vector space. However, soft prompts are not fixed discrete words of natural language. Instead, they are virtual tokens that can take on any value within the continuous multidimensional embedding space (however, they do form tight semantic clusters with fixed tokens, meaning the clusters have words with similar meanings). Through supervised learning, the model learns the values for these virtual tokens that maximize performance for a given task.

2. Weight updation: In full fine tuning, the training data set consists of input prompts and

output completions or labels. The weights of the large language model are updated during supervised learning. In contrast, with prompt tuning, the weights of the large language model are frozen and the underlying model does not get updated. Instead, the embedding vectors of the soft prompt gets updated over time to optimize the model's completion of the prompt.

Continued in Part 3.

No comments:

Post a Comment